AI systems are diverse from an architectural standpoint, but they all have one thing in common: datasets. The trouble is that these systems often need loads of data to be accurate (a state-of-the-art diagnostic system from Google’s DeepMind subsidiary required 15,000 scans from 7,500 patients), and some datasets are frankly harder to find than others.

Nvidia, the Mayo Clinic, and the MGH and BWH Center for Clinical Data Science reckon they’ve come up with a solution: a neural network that generates its own training data, specifically synthetic three-dimensional magnetic resonance images (MRIs) of brains with cancerous tumors.

Applying seemingly trivial techniques from one area to another, such as the GANs used to create realistic faces, can yield breakthroughs like this one with a tangible positive impact. Look for Nvidia and its partners to adapt this approach to other types of cancer and brain disease in a bid to dramatically improve patient care.

“We show that for the first time we can generate brain images that can be used to train neural networks,” Hu Chang, a senior research scientist at Nvidia and a lead author on the paper, told VentureBeat in a phone interview.

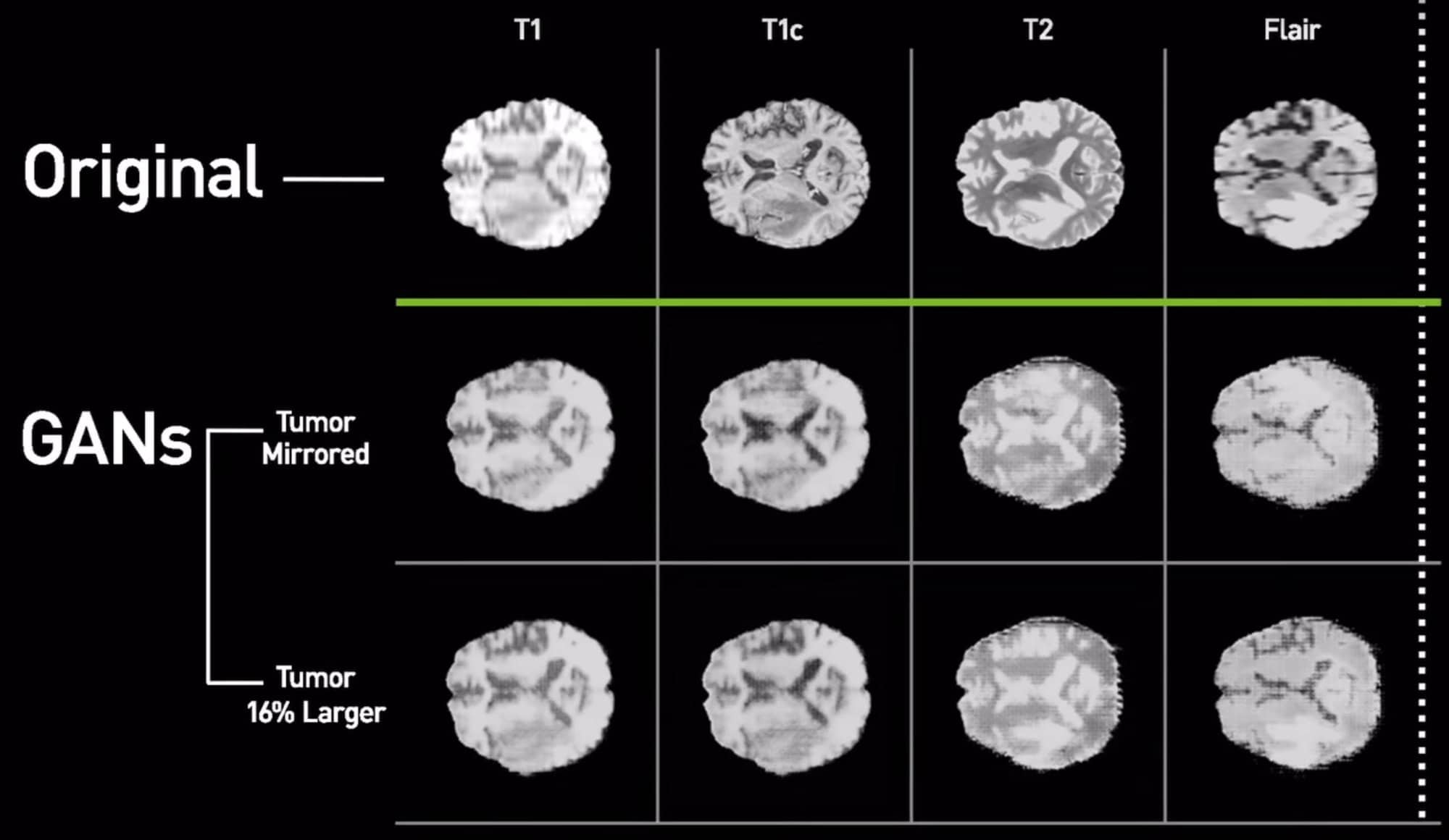

The AI system was developed using Facebook’s PyTorch deep learning framework and trained with an Nvidia DGX platform. It uses a generative adversarial network (GAN) — a two-part neural network consisting of a generator that produces samples and a discriminator, which attempts to distinguish between the generated samples and real-world samples — to create convincing MRIs of abnormal brains.
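For reference, the adversarial setup described above is usually formalized as the two-player minimax objective from the original GAN paper by Goodfellow et al., in which the discriminator D tries to maximize the value and the generator G tries to minimize it. The article does not say which GAN loss the Nvidia team used, so treat this as the textbook formulation rather than their exact objective:

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

Here x is a real scan, z is the generator's input (random noise in the original formulation), and D(·) is the discriminator's estimated probability that its input is real. The discriminator is rewarded for scoring real scans near 1 and generated ones near 0; the generator is rewarded for fooling it.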

The team used two publicly available datasets, the Alzheimer’s Disease Neuroimaging Initiative (ADNI) and the Multimodal Brain Tumor Image Segmentation Benchmark (BRATS), to train the GAN, and set aside 20 percent of BRATS’ 264 studies for performance testing. Memory and compute constraints forced the team to downsample the scans from a resolution of 256 x 256 x 108 to 128 x 128 x 54, but they used the original images for comparison.
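The preprocessing described above can be illustrated with a small, self-contained Python sketch. It is not the team's code: the nested-list volume layout, the use of 2x2x2 average pooling as the downsampling method, the seeded shuffle, and rounding 20 percent of 264 (52.8) to 53 test studies are all assumptions made for illustration. Halving each of the three dimensions cuts the voxel count per scan by a factor of 8, from 7,077,888 to 884,736.

```python
"""Hypothetical preprocessing sketch: 2x downsampling and a 20 percent holdout.

Assumptions (not from the article): volumes are nested lists indexed [x][y][z],
downsampling averages non-overlapping 2x2x2 blocks, and the split is a seeded
random shuffle of study IDs.
"""
import random
from typing import List, Sequence, Tuple, TypeVar

Volume = List[List[List[float]]]
T = TypeVar("T")


def downsample_2x(volume: Volume) -> Volume:
    """Halve each dimension by averaging every non-overlapping 2x2x2 block."""
    nx, ny, nz = len(volume), len(volume[0]), len(volume[0][0])
    if nx % 2 or ny % 2 or nz % 2:
        raise ValueError("all dimensions must be even")
    out: Volume = []
    for i in range(0, nx, 2):
        plane = []
        for j in range(0, ny, 2):
            row = []
            for k in range(0, nz, 2):
                # Gather the 8 voxels of this block and average them.
                block = [
                    volume[i + di][j + dj][k + dk]
                    for di in (0, 1) for dj in (0, 1) for dk in (0, 1)
                ]
                row.append(sum(block) / 8.0)
            plane.append(row)
        out.append(plane)
    return out


def holdout_split(items: Sequence[T], test_fraction: float = 0.2,
                  seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of items and hold out round(test_fraction * n) for testing."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(test_fraction * len(shuffled))
    return shuffled[n_test:], shuffled[:n_test]


if __name__ == "__main__":
    full, reduced = 256 * 256 * 108, 128 * 128 * 54
    print(full, reduced, full // reduced)  # 7077888 884736 8

    train, test = holdout_split(range(264))
    print(len(train), len(test))  # 211 53
```

Average pooling is used here only because it is the simplest correct way to halve each axis; the article does not say which resampling method the team used, and for real volumes a library routine such as PyTorch's `torch.nn.functional.avg_pool3d` or `torch.nn.functional.interpolate` would do the same job far faster.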

The generator was fed images from ADNI and learned to produce synthetic brain scans, complete with white matter, grey matter, and cerebrospinal fluid, from an ADNI image. Next, when it was set loose on the BRATS dataset, it generated full segmentations with tumors.

This amounts to annotating the scans, a job that takes a team of human experts hours. But how’d it do? When the team trained a machine learning model on a combination of real brain scans and synthetic brain scans produced by the GAN, it achieved 80 percent accuracy, 14 percent better than a model trained on real data alone.
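Mixing real and synthetic training data can be sketched as follows. The article gives no details of how the two sources were combined, so the function name `mix_real_and_synthetic`, the `synthetic_per_real` ratio, and the source tags are hypothetical; this is a minimal sketch of the general idea, not the team's pipeline.

```python
"""Hypothetical sketch: building a mixed real + synthetic training set.

The article says only that the model was trained on a combination of real
and synthetic brain scans; the tagging scheme, the ratio parameter, and the
function name here are illustrative assumptions.
"""
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def mix_real_and_synthetic(real: Sequence[T], synthetic: Sequence[T],
                           synthetic_per_real: float = 1.0,
                           seed: int = 0) -> List[Tuple[str, T]]:
    """Return a shuffled list of (source, sample) pairs.

    Keeps every real sample and adds up to synthetic_per_real synthetic
    samples for each real one, so the mix ratio can be varied per experiment.
    """
    if synthetic_per_real < 0:
        raise ValueError("synthetic_per_real must be non-negative")
    rng = random.Random(seed)
    # Never request more synthetic samples than are available.
    n_synth = min(len(synthetic), int(synthetic_per_real * len(real)))
    chosen = rng.sample(list(synthetic), n_synth)
    mixed = [("real", s) for s in real] + [("synthetic", s) for s in chosen]
    rng.shuffle(mixed)
    return mixed


if __name__ == "__main__":
    mixed = mix_real_and_synthetic(["r1", "r2"], ["s1", "s2", "s3"])
    print(len(mixed))  # 4
```

Tagging each sample with its source makes it straightforward to vary the mix ratio between experiments and to confirm that evaluation uses only real scans, such as the held-out BRATS studies.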

“Many radiologists we’ve shown the system have expressed excitement,” Chang said. “They want to use it to generate more examples of rare diseases.”

It’s not the first time Nvidia has employed GANs to transform brain scans. This summer, it demonstrated one system that could convert CT scans into 2D MRIs and another that could align MRI images of the same scene with superior speed and accuracy.
